It's well known that RAID 10 (aka VRAID1) generally outperforms RAID 5 (aka VRAID5) for write-heavy workloads, but at a higher cost per GB. I wanted to revisit the cost-benefit analysis that led us to use RAID 5, because in the past I've always used RAID 10 for at least logs and tempdb, and for all SQL data when possible.

To make sure that my analysis was relevant to our particular IO load, I planned to generate load on each type of drive using IOMeter, for which I would need to construct an appropriate workload. Using Windows Performance Monitor, I recorded a Counter Log on our main OLTP server over the course of several days, capturing the following counters for each drive:

- Avg Disk Bytes/Read

- Avg Disk Bytes/Write

- Avg Disk Queue Length

- Disk Reads/sec

- Disk Writes/sec

To build the workload, I first bucketed each reading of Avg Disk Bytes/[Read, Write] into a size category (.5K, 1K, ...64K, 128K, etc.). To determine what size IOs to generate, I then chose enough categories to account for 80% of the readings.
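The bucketing step can be sketched roughly as follows. This is only an illustration, not the exact procedure I used: the function names are made up, rounding each reading up to the next power-of-two size is an assumption, and so is rescaling the kept categories so that their shares sum to 100%.

```python
from collections import Counter
import math


def bucket_size(avg_bytes):
    """Round a reading up to the next power-of-two size, with a 512-byte floor.

    Rounding up (rather than to the nearest size) is an assumption.
    """
    n = max(512, math.ceil(avg_bytes))
    return 1 << (n - 1).bit_length()


def dominant_sizes(samples, coverage=0.80):
    """Return [(size_in_bytes, share)] for the most common size buckets.

    Buckets are taken from most to least common until they cover at least
    `coverage` of the readings. Readings of 0 (intervals with no IO of that
    type) are skipped. Shares are rescaled to sum to 1.0 across the kept
    buckets, which is an assumption about how the final mix is expressed.
    """
    counts = Counter(bucket_size(s) for s in samples if s > 0)
    total = sum(counts.values())
    chosen, covered = [], 0
    for size, n in counts.most_common():
        chosen.append((size, n))
        covered += n
        if covered / total >= coverage:
            break
    return [(size, n / covered) for size, n in chosen]


# Hypothetical readings of Avg Disk Bytes/Write, one per sample interval.
readings = [65536, 61000, 65536, 8192, 65536, 7900, 65536, 0, 4096, 65536]
for size, share in dominant_sizes(readings):
    print(f"{size // 1024}K: {share:.0%}")
```

Note that this weights every reading equally, regardless of how many IOs happened in that interval, which matches bucketing "each reading" rather than each IO.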

Then I added the averages of Disk Reads/sec and Disk Writes/sec in order to get the percentages of reads and writes against each disk, and used the average of Avg Disk Queue Length to determine how many IOs outstanding to queue up.
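As a rough sketch of that arithmetic (the function name and the input lists are hypothetical, and rounding the average queue length to a whole number of outstanding IOs is an assumption):

```python
from statistics import mean


def io_mix(reads_per_sec, writes_per_sec, queue_lengths):
    """Derive the read/write split and queue depth for one disk.

    Each argument is a list of readings of the corresponding counter
    (Disk Reads/sec, Disk Writes/sec, Avg Disk Queue Length).
    """
    avg_reads = mean(reads_per_sec)
    avg_writes = mean(writes_per_sec)
    total = avg_reads + avg_writes
    if total == 0:
        raise ValueError("no IO recorded for this disk")
    return {
        "read_pct": 100 * avg_reads / total,
        "write_pct": 100 * avg_writes / total,
        # Assumption: round the average queue length to a whole IO count.
        "outstanding_ios": round(mean(queue_lengths)),
    }


# Hypothetical readings, one per sample interval.
print(io_mix([10, 30], [60, 100], [3, 5]))
```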

Finally, I repeated the whole exercise using only the samples where the disk queue length was greater than 2. I did this because I figured that the SAN probably wasn't having any trouble when disk queues were low; during those times, RAID 5 vs RAID 10 would only show a difference in latency. The trouble comes when loads (for which disk queues are a proxy) are high; that's when you need maximum throughput and minimum response time.
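The filtering itself is simple. A minimal sketch, assuming each sample interval has been loaded as a dict keyed by counter name (the row layout and the function name are hypothetical):

```python
def busy_samples(rows, min_queue=2.0, queue_key="Avg Disk Queue Length"):
    """Keep only the sample intervals where the disk queue exceeded min_queue."""
    return [row for row in rows if row[queue_key] > min_queue]


# Hypothetical sample rows, one per interval.
rows = [
    {"Avg Disk Queue Length": 0.5, "Disk Writes/sec": 40},
    {"Avg Disk Queue Length": 2.0, "Disk Writes/sec": 90},
    {"Avg Disk Queue Length": 3.1, "Disk Writes/sec": 400},
    {"Avg Disk Queue Length": 7.0, "Disk Writes/sec": 950},
]
busy = busy_samples(rows)
print(len(busy), "of", len(rows), "intervals kept")
```

The size buckets, read/write percentages, and queue depth are then recomputed from the kept rows only.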

After all this, I came up with the following characteristic workloads for our system (yours may vary substantially):

TEMPDB

Reads - 2%

- 68% 64K read size

- 32% 8K read size

Writes - 98%

- 83% 64K write size

- 17% 512B write size

600 IOs outstanding

LOGS

Reads - 36%

- 87% 128K read size

- 13% 1024K read size

Writes - 64%

- 81% 64K write size

- 19% 16K write size

4 IOs outstanding

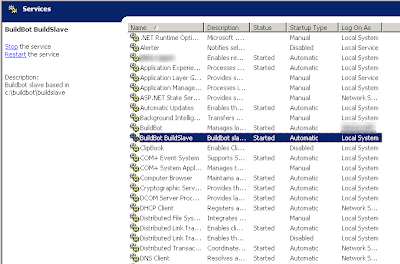

Next I worked with our Systems Engineering group to create a VRAID5 and a VRAID1 drive of the same size and attached both to the same server. I created Access Specifications with IOMeter for each workload shown above (see the image above), and then ran those Access Specifications against each drive with the requisite number of IOs outstanding.

This yielded some fairly conclusive results. I won't post the actual throughput numbers, but here are the margins by which VRAID1 outperformed VRAID5 for each workload:

TEMPDB

| IOPS | MB/s | Avg IO Response Time |
| --- | --- | --- |
| 117.65% | 117.55% | 51.48% |

LOGS

| IOPS | MB/s | Avg IO Response Time |
| --- | --- | --- |
| 80.05% | 65.63% | 39.69% |
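For anyone repeating this with their own numbers, here is one plausible way to compute margins like these. It is an assumption about the formula, not a record of the exact calculation: for IOPS and MB/s, where higher is better, the margin is the relative gain over VRAID5; for response time, where lower is better, it is the relative reduction from VRAID5. The function names and the inputs are hypothetical.

```python
def gain_margin(vraid1, vraid5):
    """Percent by which VRAID1 exceeds VRAID5 (IOPS, MB/s: higher is better)."""
    return 100 * (vraid1 - vraid5) / vraid5


def reduction_margin(vraid1, vraid5):
    """Percent by which VRAID1 undercuts VRAID5 (response time: lower is better)."""
    return 100 * (vraid5 - vraid1) / vraid5


# Made-up inputs purely for illustration; these are not my test results.
print(f"IOPS margin: {gain_margin(2000, 1000):.2f}%")
print(f"Response time margin: {reduction_margin(5.0, 10.0):.2f}%")
```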

So according to my testing, VRAID1 is vastly superior for these types of workloads, to the point where it's probably worth the higher cost per GB. We'll see how this bears out in further testing and in real-world usage shortly...